EDaWaX-Study on Data Policies of Journals in Economics and Business Studies (Part I)

Posted: January 14th, 2015 | Author: Sven | Filed under: Data Policy, EDaWaX, journals | Tags: study, WP2

One work package (WP2) of EDaWaX’s second funding phase deals with a broader analysis and comparison of journals’ data policies in economics and business studies. In the project’s first funding phase we had already conducted a similar survey, but it focused primarily on journals in economics.

Because research data and methodology in business studies are not necessarily identical to those employed in economics, we considered it important to compare the data policies of journals in both branches of economic research.

Methodology of our study

To compile a sample for our analyses, we used several lists of academic journals assembled by German economic associations. For instance, we included the JOURQUAL 2.1 list for journals in business studies. The JOURQUAL ranking is the outcome of a survey conducted among the members of the German Academic Association for Business Research (VHB) in fall 2010. In total, 848 association members took part (at least partially) in the extensive questionnaire, in which they were asked to rate 838 journals. In addition, we again included the sample of journals used by Bräuninger, Haucap and Muck, which focuses primarily on journals in economics.

Because the JOURQUAL list contains 838 journals, we had to select a subsample: we simply do not have the resources to analyse all of these journals.

Therefore, we chose all journals from the JOURQUAL list rated A+, A or B. This selection criterion is based on the results of our analyses in project phase 1, in which we found that data policies exist primarily in high-ranked journals. Using this approach, 258 of the 838 journals remained in the sample. In addition, we randomly selected 60 journals rated C, D or E. With the aid of this subsample, we wanted to check once more whether our assumption of a relationship between a journal’s high ranking and the existence of a data policy is correct.

The entire sample of Bräuninger, Haucap and Muck (153 journals) was added to our research sample.

In the next step, we removed duplicate entries (many journals in the Bräuninger, Haucap and Muck sample are also included in the JOURQUAL list). Additionally, we checked the “aims and scope” section of each journal to find out whether it generally publishes empirical studies and research papers. Journals publishing only theoretical papers or papers based on policy debates were removed from our sample.

As a result of these steps, the sample shrank: in total, 346 journals remained in our database, which is still a very large sample compared to similar analyses.

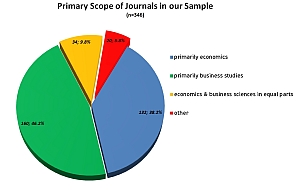
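The sampling steps described above can be summarised as a small pipeline. Before deduplication and filtering, the three sources add up to 258 + 60 + 153 = 471 entries, which were reduced to 346 journals. The sketch below is only an illustration, not the project’s actual tooling: the record layout (`title`, `rating`, `empirical`), the function name `build_sample` and the title-based duplicate matching are all hypothetical assumptions (matching on ISSN would likely be more robust in practice).

```python
import random


def build_sample(jourqual, bhm, seed=0):
    """Hypothetical sketch of the sampling steps; each journal is assumed
    to be a dict with 'title', 'rating' (or None) and 'empirical' keys."""
    rng = random.Random(seed)  # fixed seed so the random draw is reproducible
    # Step 1: keep every JOURQUAL journal rated A+, A or B.
    top = [j for j in jourqual if j["rating"] in {"A+", "A", "B"}]
    # Step 2: add a random draw of (up to) 60 journals rated C, D or E.
    lower = [j for j in jourqual if j["rating"] in {"C", "D", "E"}]
    control = rng.sample(lower, k=min(60, len(lower)))
    # Step 3: add the entire Bräuninger/Haucap/Muck sample.
    combined = top + control + list(bhm)
    # Step 4: remove duplicates, keeping the first occurrence
    # (assumption: matched on case-insensitive title).
    unique = {}
    for j in combined:
        unique.setdefault(j["title"].casefold(), j)
    # Step 5: drop journals that do not publish empirical work.
    return [j for j in unique.values() if j["empirical"]]


# Toy example with made-up journals.
jourqual = [
    {"title": "Journal A", "rating": "A", "empirical": True},
    {"title": "Journal T", "rating": "B", "empirical": False},
    {"title": "Journal B", "rating": "C", "empirical": True},
]
bhm = [
    {"title": "journal a", "rating": None, "empirical": True},
    {"title": "Journal E", "rating": None, "empirical": True},
]
print([j["title"] for j in build_sample(jourqual, bhm)])
```

In this toy run, “journal a” is recognised as a duplicate of “Journal A”, and the purely theoretical “Journal T” is dropped.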

In contrast to our approach in the first funding phase, we also determined the primary subject scope of every journal in our sample. This classification allowed us to break down the results of our study by the subdomains of economic research. The lists of journals provided by professional associations are not sufficient for this purpose, because they do not distinguish accurately between subject categories.

Therefore, we employed the subject categories used in the Thomson Reuters Journal Citation Reports (JCR). If the JCR lists more than one subject category for a journal and all of them belong to the broader field of economic research, we used all of them (‘economics & business in equal parts’). If only one of the categories belongs to the field of economic research, we used only that subject category (either ‘primarily economics’ or ‘primarily business studies’).

If none of the subject categories relates to economic research, we assigned the journal to a scope called ‘other’.

For journals not listed in the JCR, we used the indexing guidelines of the ZBW (German National Library of Economics/Leibniz Information Centre for Economics).
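The classification rules can be expressed as a short decision function. This is a simplified sketch under stated assumptions: the sets `ECONOMICS` and `BUSINESS` are a hypothetical mapping of JCR category names to the two branches, the ZBW-based label for non-JCR journals is modelled as a precomputed input (`zbw_scope`), and combinations the text does not cover (for example economics, business and a third field) are resolved by our own reading of the rules, not necessarily the study’s.

```python
# Hypothetical mapping of JCR subject categories to the two branches of
# economic research (illustrative only, not the project's actual list).
ECONOMICS = {"Economics"}
BUSINESS = {"Business", "Business, Finance", "Management"}


def primary_scope(jcr_categories, zbw_scope=None):
    """Sketch of the scope rules; zbw_scope is a hypothetical fallback
    label derived from the ZBW indexing guidelines."""
    if not jcr_categories:
        # Journal not listed in the JCR: use the ZBW-based label.
        # Returning 'other' when no label is given is our own assumption.
        return zbw_scope if zbw_scope is not None else "other"
    has_econ = any(c in ECONOMICS for c in jcr_categories)
    has_bus = any(c in BUSINESS for c in jcr_categories)
    if has_econ and has_bus:
        # Categories from both branches of economic research.
        return "economics & business in equal parts"
    if has_econ:
        return "primarily economics"
    if has_bus:
        return "primarily business studies"
    # None of the listed categories belongs to economic research.
    return "other"


print(primary_scope(["Economics", "Psychology"]))  # primarily economics
```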

Because one of the goals of our work package is to identify characteristics of journals that have data policies, we also collected further information on the journals in our sample: for instance, their impact factor (if available) and their ratings in JOURQUAL 2.1 (2011) and in the Handelsblatt ranking (2012).

Subsequently, we checked the website(s) of each journal for data policies (in some cases a journal has two websites: the publisher’s website and a website maintained by the editors). Where we found a guideline, we categorized it according to criteria we developed during the first funding phase of EDaWaX.

Some characteristics of our sample

Based on this approach, we determined that 46.2% of all journals in our sample belong primarily to the subject category of business studies and 38.2% to economics. A further 9.8% are open to submissions from economics and business studies in equal parts, while 5.8% are primarily associated with other subject categories (for example psychology, mathematics, operations research or sociology).

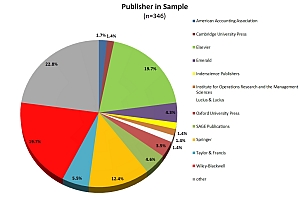
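As a quick plausibility check, the four shares should add up to 100%, since every journal was assigned to exactly one scope. The sketch below also converts the percentages into approximate journal counts. It assumes that the shares refer to the full sample of 346 journals; the resulting counts are our own back-calculation, not figures reported in the study.

```python
# Shares (in percent) of the four primary scopes, as reported above.
shares = {
    "business studies": 46.2,
    "economics": 38.2,
    "economics & business in equal parts": 9.8,
    "other": 5.8,
}
N = 346  # assumption: the shares refer to the full sample of 346 journals

# The scopes are mutually exclusive, so the shares should sum to 100%.
total = round(sum(shares.values()), 1)

# Approximate counts back-calculated from the rounded percentages.
counts = {scope: round(pct / 100 * N) for scope, pct in shares.items()}

# Converting the counts back to percentages reproduces the reported shares.
recovered = {scope: round(c / N * 100, 1) for scope, c in counts.items()}

print(total)                 # 100.0
print(counts)
print(sum(counts.values()))  # 346
print(recovered == shares)   # True
```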

Looking at the publishers in our sample, we found that the three largest are the ‘usual suspects’: Wiley-Blackwell and Elsevier each publish 19.7% of all journals in our sample, followed by Springer in third place with 12.4%. Together, these three publishers account for 51.8% of the journals in our sample.

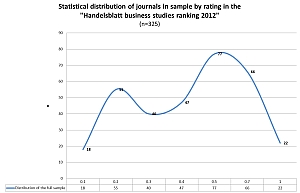

Fig. 3: Statistical distribution of journals in our sample by rating in the Handelsblatt ranking 2012 (n=325)

Looking at the distribution of the journals in our sample across the rating groups, we noticed that the largest group is rated 0.5 in the Handelsblatt ranking, an important ranking for German economists. In total, more than 50% of all journals belong to the three best-rated groups, so better-rated journals are disproportionately represented. Nevertheless, approx. 35% of the journals belong to the three lower-rated groups. Moreover, 21 journals in our sample are not included in the Handelsblatt ranking. Most often, the reason is that these journals belong to a group that is not (yet?) indexed in many rankings, probably because they are not considered important enough. If we count these unranked journals as lower-ranked, the share of lower-ranked journals in our sample rises to around 38%.

According to our analyses, the medians for the full sample are 1.372 for the Thomson Reuters Impact Factor (mean: 1.981), 7.410 for the JOURQUAL 2.1 rating (mean: 7.442) and 0.500 for the Handelsblatt ranking (mean: 0.462).
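Summary statistics like these are straightforward to compute with Python’s standard `statistics` module. The sketch below is an illustration with made-up values, not the study’s data. It assumes that journals without a value (for example those without an impact factor) are simply excluded; the post does not say how missing values were handled. A mean noticeably above the median, as for the impact factor (1.981 vs. 1.372), suggests a right-skewed distribution in which a few journals with high values pull the mean upwards.

```python
from statistics import mean, median


def summarize(values):
    """Median and mean of a journal metric, skipping missing values (None).
    Excluding missing values is our assumption, not the study's stated method."""
    available = [v for v in values if v is not None]
    return {"n": len(available), "median": median(available), "mean": mean(available)}


# Hypothetical impact factors for five journals; one has no impact factor.
impact_factors = [0.8, 1.2, None, 1.5, 4.1]
print(summarize(impact_factors))
```

Here the single high value (4.1) lifts the mean to about 1.9, while the median of the four available values stays at 1.35.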

…End of part I – in part II we will present some results of our study…

The findings of this study (part II, as I called it above) are available as a separate paper in the conference proceedings of ELPUB2015.

Photo: Own work. License: CC-BY-SA 3.0

Charts: Own work. License: CC-BY-SA 3.0

Part II will be published in September. I will present some of the results we obtained at the ELPUB2015 conference in Malta.

Our paper will be part of the conference proceedings (of course, I will attach the paper to this blog post).

Thank you for your patience!